PROJECT: introduction

Commanding robots by thought alone is a technical and social dream that is becoming a reality thanks to recent advances in brain-machine interfaces and robotics. On the one hand, brain-computer interfaces provide a new communication channel that bypasses the normal output pathways; it benefits patients with severe neuromuscular disabilities and can also be used by healthy subjects. On the other hand, robotics provides a physical entity embodied in a given space, ready to perceive, explore, navigate and interact with the environment. Combining both areas opens a wide range of possibilities for research and applications.

One of the major goals for human applications is to work with non-invasive (non-intracranial) methods, of which the most popular is the electroencephalogram (EEG). So far, systems based on human EEG have been used to control a mouse on the screen [1] and for communication, for example through a speller [2] or an internet browser [3], among other applications. Regarding brain-actuated robots, the first control was demonstrated in 2004 [4]; since then, research has focused on wheelchairs [5], [6], manipulators [7] and small-size humanoids [8]. All these developments share an important property: the user and the robot are located in the same environment.

A breakthrough in brain-teleoperated robots came in January 2008, when a collaboration between two research teams enabled a monkey in the USA to control the lower part of a humanoid robot in Japan via the Internet [9]. The brain activity was recorded with an invasive method, which required performing a craniotomy on the animal. To date, there is no evidence of a similar result in humans or with non-invasive methods. Yet the ability to brain-teleoperate a robot in a remote scenario opens a new dimension of possibilities for patients with severe neuromuscular disabilities. This website reports the first EEG-based brain-actuated teleoperation by a human between remote places.

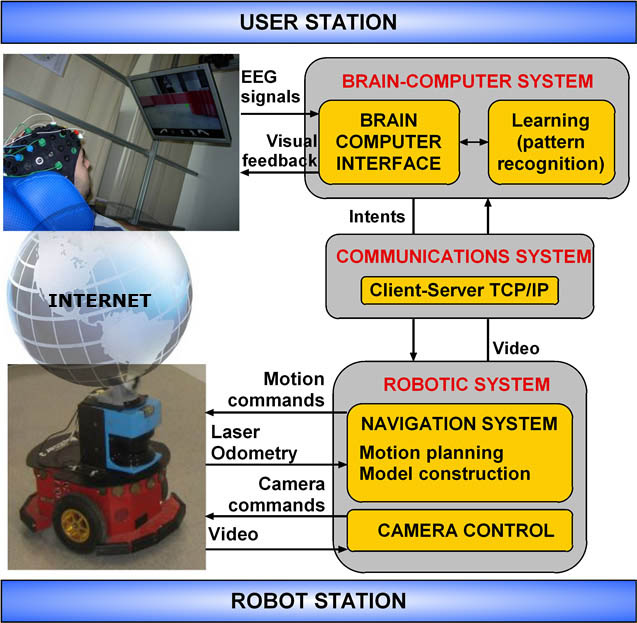

The brain-actuated teleoperation system described here relies on a user station and a robot station, located in remote places and connected via the Internet (Figure 1).

Fig. 1. Design of the brain-actuated robot: the two stations, their main systems and the information flow between them.
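The two-station architecture in Figure 1 can be illustrated with a minimal command exchange. This sketch is an assumption, not the project's actual protocol: the `TargetCommand` message, its fields and the newline-delimited JSON encoding are hypothetical, and `socket.socketpair()` stands in for the Internet link between the user station and the robot station.

```python
import json
import socket
from dataclasses import dataclass
from typing import TextIO


# Hypothetical message format: the real system's protocol is not described here.
@dataclass
class TargetCommand:
    """Command sent from the user station to the robot station."""
    mode: str  # "navigation" or "camera" (the two teleoperation modes)
    x: float   # target position in the robot frame, metres (assumed convention)
    y: float

    def to_json(self) -> str:
        return json.dumps({"mode": self.mode, "x": self.x, "y": self.y})

    @staticmethod
    def from_json(text: str) -> "TargetCommand":
        d = json.loads(text)
        if d["mode"] not in ("navigation", "camera"):
            raise ValueError(f"unknown mode: {d['mode']!r}")
        return TargetCommand(mode=d["mode"], x=float(d["x"]), y=float(d["y"]))


def send_command(sock: socket.socket, cmd: TargetCommand) -> None:
    """User station side: send one JSON object per line."""
    sock.sendall((cmd.to_json() + "\n").encode("utf-8"))


def recv_command(stream: TextIO) -> TargetCommand:
    """Robot station side: read one line and decode it."""
    line = stream.readline()
    if not line:
        raise ConnectionError("link closed before a command arrived")
    return TargetCommand.from_json(line)


if __name__ == "__main__":
    # A local socket pair stands in for the Internet connection between stations.
    user_side, robot_side = socket.socketpair()
    with user_side, robot_side, robot_side.makefile("r", encoding="utf-8") as stream:
        send_command(user_side, TargetCommand("navigation", 2.0, 0.5))
        print(recv_command(stream))
```

Newline-delimited JSON keeps the sketch readable; a real link would also carry the video stream and robot state back to the user station, which this example leaves out.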

This teleoperation system allows the user to perform navigation and visual exploration tasks, even in unknown environments. There are two teleoperation modes: robot navigation and camera control. In both modes, the subject watches the real-time video captured by the robot camera, combined with augmented reality. Over this representation, the subject concentrates on the area of the space that the robot should reach or that the camera should explore. A visual stimulation process elicits a neurological phenomenon, from which the brain-computer system detects the target area. In the navigation mode, the target destination is transferred to the autonomous navigation system, which drives the robot to the desired place while avoiding collisions with the obstacles detected by the laser scanner. In the camera mode, the camera is aligned with the target area to perform active visual exploration of the scenario.

We validated the prototype with five healthy subjects in two consecutive steps: (i) screening and training of the users, and (ii) predefined navigation and visual exploration teleoperation tasks carried out over one week between two cities 260 km apart. Based on these experiments, we report a technical evaluation of the device and a study of coherence across trials and subjects.
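The selection-and-dispatch loop described above can be sketched as follows. The text does not specify the detection algorithm, so this is an assumed, simplified scheme: each stimulus presentation ("flash") of a candidate area yields a classifier score, scores are averaged per area over repeated presentations, and the area with the highest mean is taken as the target. The area table, the robot-frame convention (x forward, y left, z up, camera above the origin) and the names `detect_target`, `camera_angles` and `dispatch` are all hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence, Tuple

# (x forward, y left, z up) in metres, robot frame (assumed convention).
Point3 = Tuple[float, float, float]


def detect_target(flashes: Sequence[Tuple[int, float]]) -> int:
    """Return the area id with the highest mean classifier score.

    `flashes` holds one (area_id, score) pair per stimulus presentation; the
    scores would come from an EEG classifier that is not modelled here.
    Raises ValueError if `flashes` is empty.
    """
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for area_id, score in flashes:
        totals[area_id] += score
        counts[area_id] += 1
    return max(totals, key=lambda a: totals[a] / counts[a])


def camera_angles(target: Point3, camera_height: float) -> Tuple[float, float]:
    """Pan and tilt (radians) that aim the camera at `target`.

    The camera is assumed to sit above the robot-frame origin at
    `camera_height`; positive pan turns left, negative tilt looks down.
    """
    x, y, z = target
    pan = math.atan2(y, x)
    tilt = math.atan2(z - camera_height, math.hypot(x, y))
    return pan, tilt


def dispatch(mode: str, areas: Dict[int, Point3],
             flashes: Sequence[Tuple[int, float]], camera_height: float = 1.0):
    """Turn the detected target area into a command for the selected mode."""
    x, y, z = areas[detect_target(flashes)]
    if mode == "navigation":
        # The goal would be handed to the autonomous navigation system, which
        # plans around obstacles seen by the laser scanner (not shown here).
        return ("goal", (x, y))
    if mode == "camera":
        return ("pan_tilt", camera_angles((x, y, z), camera_height))
    raise ValueError(f"unknown mode: {mode!r}")
```

In this sketch, averaging over repeated presentations trades speed for reliability: more presentations per area give a more stable mean score, but each selection takes longer.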