Semantic and structural image segmentation for prosthetic vision

Melani Sanchez-Garcia, Ruben Martinez-Cantin, Jose J. Guerrero

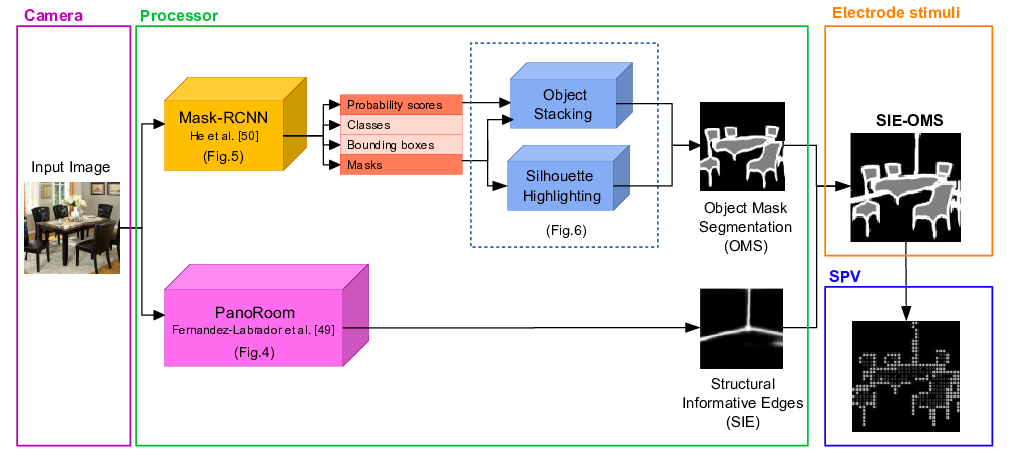

Prosthetic vision is being applied to partially restore retinal stimulation in visually impaired people. However, the phosphene images produced by the implants carry very limited information because of their poor resolution and lack of color or contrast. As a result, the ability of prosthetic users to recognize objects and understand scenes in real environments is severely restricted. Computer vision can play a key role in overcoming these limitations by optimizing the visual information shown in simulated prosthetic vision and increasing the amount of information that is presented. We present a new approach to building a schematic representation of indoor environments for phosphene images. The proposed method combines several convolutional neural networks to extract and convey relevant information about the scene, such as structurally informative edges of the environment and silhouettes of segmented objects. Experiments were conducted with normally sighted subjects using a Simulated Prosthetic Vision system. The results show good accuracy on object recognition and room identification tasks for indoor scenes with the proposed approach, compared to other image processing methods.
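To make the idea of a schematic phosphene representation more concrete, the following is a minimal sketch and not the authors' implementation. It assumes the networks have already produced two binary masks of the same size (one for structural edges, one for object silhouettes), merges them, and downsamples the result to a coarse grid of quantized brightness values. The function names `combine_masks` and `to_phosphene_grid`, the block-averaging scheme, the grid size, and the number of brightness levels are all hypothetical choices made for illustration.

```python
def combine_masks(edges, silhouettes):
    """Pixel-wise union of two binary masks given as lists of rows of 0/1.

    Assumption: both masks have identical dimensions.
    """
    return [
        [1 if (e or s) else 0 for e, s in zip(edge_row, sil_row)]
        for edge_row, sil_row in zip(edges, silhouettes)
    ]


def to_phosphene_grid(mask, grid_rows, grid_cols, levels=8):
    """Reduce a binary mask to a grid_rows x grid_cols grid of brightness levels.

    Each grid cell is the mean of the mask pixels it covers, quantized to an
    integer in [0, levels - 1]. Assumes the mask is at least as large as the
    grid in both dimensions, so that no cell covers an empty block.
    """
    height, width = len(mask), len(mask[0])
    grid = []
    for r in range(grid_rows):
        r0, r1 = r * height // grid_rows, (r + 1) * height // grid_rows
        row = []
        for c in range(grid_cols):
            c0, c1 = c * width // grid_cols, (c + 1) * width // grid_cols
            block = [mask[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            mean = sum(block) / len(block)
            # Round half up to the nearest brightness level.
            row.append(int(mean * (levels - 1) + 0.5))
        grid.append(row)
    return grid


if __name__ == "__main__":
    # Toy 4x4 masks: a horizontal edge on the top row, an object bottom-right.
    edges = [[1, 1, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    silhouettes = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
    schematic = combine_masks(edges, silhouettes)
    print(to_phosphene_grid(schematic, 2, 2, levels=8))  # [[4, 4], [0, 7]]
```

A real simulation would typically render each grid value as a blurred dot rather than a flat cell. The sketch stops at the grid because that is the step where the resolution and contrast limits mentioned above become visible.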

Citation

Melani Sanchez-Garcia, Ruben Martinez-Cantin and Jose J. Guerrero (2020) Semantic and structural image segmentation for prosthetic vision. PLOS One, 15(1):e0227677.