Javier Civera

NEWS

December 2016. We released a dataset for object learning from multimodal human-robot interaction, as part of the CHISTERA-IGLU project. Find all the details below. IGLU, which stands for "Interactive Grounded Language Understanding", aims at robotic knowledge acquisition, modelling and grounding from multimodal human-robot interaction. I believe that interaction is needed in small-data contexts, and that applying deep learning to spatio-temporal, multimodal data could improve performance even further. Our dataset is the first of its kind: it includes synchronized audio and RGB-D recordings of several users interacting naturally with objects (pointing at them, showing them, saying their names), seen from a robocentric point of view. Our goal is to build visual object models from such interactions.

Pablo Azagra, Yoan Mollard, Florian Golemo, Ana Cristina Murillo, Manuel Lopes, Javier Civera

A Multimodal Human-Robot Interaction Dataset (pdf) (poster) (link to the dataset) (video). Workshop on the Future of Interactive Learning Machines (FILM), held with NIPS 2016, Barcelona, Spain, 2016.

November 2016. Very happy with our first steps towards fusing deep learning and multiview geometry for monocular mapping! Fusing the depth patterns learned from single views with the depth estimated from multiple views has huge potential for producing accurate, dense reconstructions from a monocular camera. Find all the details in our arXiv paper and video.

José M. Fácil, Alejo Concha, Luis Montesano, Javier Civera

Deep Single and Direct Multi-View Depth Fusion (arXiv link) (video). arXiv:1611.07245, 2016.

November 2016. I was invited as a keynote speaker to the International Conference on Cloud and Robotics (ICCR 2016) in Saint-Quentin, France. I had a great time with excellent researchers, discussing the huge potential of the Cloud in our field. Thank you very much for the invitation, Yulin Zhang!

March 2016. Together with Jeannette Bohg, Matei Ciocarlie and Lydia E. Kavraki, I am co-editing a Special Issue on Big Data for Robotics for the Big Data journal. The submission deadline is June 15th; find the rest of the details below.

Jeannette Bohg, Matei Ciocarlie, Javier Civera, Lydia E. Kavraki

Special Issue on Big Data for Robotics. Big Data, Mary Ann Liebert, Inc. publishers. Deadline for paper submissions: June 15th, 2016. Full Call for Papers here.

February 2016. Alejo Concha and I, in collaboration with Giuseppe Loianno and Vijay Kumar from UPenn, combined recent direct monocular SLAM algorithms with IMU measurements and developed one of the very first visual-inertial dense SLAM systems. The system runs in real time at video rate on a standard CPU. Its main advantages over purely monocular sensing are greater robustness and the estimation of the true scale of the map and the camera motion, both essential for robotics applications. The paper describing this research was accepted at ICRA 2016; find the details there, and an illustrative video here.
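A toy sketch of why inertial measurements make the scale observable (not the method of the ICRA 2016 paper): assume monocular SLAM gives camera displacements v_i that are correct only up to an unknown scale, and integrating the IMU gives the same displacements u_i in meters. The metric scale is then the one-parameter least-squares fit s = Σ u_i·v_i / Σ v_i·v_i. The function name and the noise-free inputs are illustrative assumptions; a real visual-inertial system estimates scale jointly with velocity, gravity and sensor biases.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def fit_metric_scale(visual: Sequence[Vec3], inertial: Sequence[Vec3]) -> float:
    """Scale s minimizing sum_i ||s * v_i - u_i||^2, i.e. sum(u_i . v_i) / sum(v_i . v_i).

    visual:   up-to-scale displacements v_i from monocular SLAM (hypothetical input)
    inertial: metric displacements u_i integrated from the IMU (hypothetical input)
    """
    if not visual or len(visual) != len(inertial):
        raise ValueError("need the same, non-zero number of displacements")
    num = sum(dot(u, v) for v, u in zip(visual, inertial))
    den = sum(dot(v, v) for v in visual)
    if den == 0.0:
        raise ValueError("all visual displacements are zero: scale is unobservable")
    return num / den


if __name__ == "__main__":
    vis = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.5, 0.5, 1.0)]
    imu = [(3.0, 0.0, 0.0), (0.0, 6.0, 0.0), (1.5, 1.5, 3.0)]
    print(fit_metric_scale(vis, imu))  # 3.0
```

Since the monocular map is only defined up to this factor, multiplying it by s expresses both map and trajectory in meters.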

January 2016. Our WACV submission, a great piece of work led by Wajahat Hussain, was accepted. We show how learning view-invariant features helps in retrieval and text spotting when training data is small or has blind spots. View invariance is achieved by leveraging single-view geometry to rectify image patterns into canonical fronto-parallel views. It is very interesting to see how 3D cues can help in pattern discovery and recognition! UPDATE: We compiled all the material related to this paper on this project page https://sites.google.com/site/smalldatawacv16/, including the Matlab code (https://bitbucket.org/nonsemantic/novel_views/get/master.zip) and the Hotels dataset (https://bitbucket.org/nonsemantic/hotels_dataset_wacv/get/master.zip).

Wajahat Hussain, Javier Civera, Luis Montano and Martial Hebert

Dealing with Small Data and Training Blind Spots in the Manhattan World (pdf) (project page) (code) (Hotels dataset). IEEE Winter Conference on Applications of Computer Vision (WACV16), Lake Placid, NY, USA, 2016.
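The rectification idea can be sketched with a virtual camera rotation. Assuming calibrated intrinsics K (square pixels, zero skew) and a plane normal n known in the camera frame (in a Manhattan world it could come from vanishing points), rotating the camera so that its optical axis aligns with n makes the plane fronto-parallel, and the corresponding image warp is the homography H = K R K^-1. This is a minimal pure-Python sketch under those assumptions, not the released Matlab code; the function names and the example intrinsics are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    """3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation_aligning(a: Sequence[float], b: Sequence[float]) -> Mat3:
    """Rotation R with R a = b, for unit vectors a and b that are not opposite.

    Uses R = I + [v]x + [v]x^2 / (1 + c), with v = a x b and c = a . b.
    """
    v = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
    c = a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
    if c <= -1.0 + 1e-12:
        raise ValueError("opposite vectors: the rotation axis is not unique")
    vx = [[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]]
    vx2 = matmul(vx, vx)
    k = 1.0 / (1.0 + c)
    return [[(1.0 if i == j else 0.0) + vx[i][j] + k * vx2[i][j] for j in range(3)]
            for i in range(3)]


def rectifying_homography(f: float, cx: float, cy: float, normal: Sequence[float]) -> Mat3:
    """H = K R K^-1, where R turns the plane normal onto the optical axis (+z)."""
    norm = math.sqrt(sum(x * x for x in normal))
    n = [x / norm for x in normal]
    if n[2] < 0.0:  # pick the normal orientation facing +z so that R is well defined
        n = [-x for x in n]
    K = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
    K_inv = [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]
    R = rotation_aligning(n, (0.0, 0.0, 1.0))
    return matmul(matmul(K, R), K_inv)


def warp(H: Mat3, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through H using homogeneous coordinates."""
    u, v, w = (H[i][0] * x + H[i][1] * y + H[i][2] for i in range(3))
    return u / w, v / w
```

After the warp, a square drawn on the tilted plane appears as a square again, because every point of the plane ends up at the same depth in the rotated virtual camera; the perspective distortion that hurts appearance-based matching is removed.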

November 2015. Alejo Concha released the code of our DPPTAM system, presented at IROS 2015. DPPTAM is a dense, piecewise-planar monocular SLAM system that runs in real time on a CPU. Find the paper here, a YouTube video here, and the code on GitHub at https://github.com/alejocb/dpptam

October 2015. I have been invited to the Qualcomm AR Lecture Series in Vienna on Nov. 19th. Eager to discuss vision research and AR applications with them!

September 2015. We just released S-PTAM, a feature-based stereo SLAM system. Find the code here, and an illustrative video on YouTube at https://youtu.be/kq9DG5PQ2k8 This is the result of a collaboration with Taihú Pire, Thomas Fischer, Pablo de Cristóforis and Julio Jacobo, from the Robotics and Embedded Systems Lab at the University of Buenos Aires, Argentina. Find more details in our IROS15 paper:

Taihú Pire, Thomas Fischer, Javier Civera, Pablo de Cristóforis, Julio César Jacobo Berlles

Stereo Parallel Tracking and Mapping for Robot Localization (pdf). IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS15), Hamburg, Germany, 2015.

July 2015. Three IROS papers accepted! :D

June 2015. Giuseppe Serra, Rita Cucchiara, Kris Kitani and I are organizing a Special Issue on Wearable and Ego-vision Systems for Augmented Experience for the IEEE Transactions on Human-Machine Systems. The relevant information is below:

Giuseppe Serra, Rita Cucchiara, Kris M. Kitani, Javier Civera

Special Issue on Wearable and Ego-vision Systems for Augmented Experience. IEEE Transactions on Human-Machine Systems. Paper submissions are due October 1st, 2015. The issue will be published in 2016. Download the Call for Papers here.

May 2015. I will be at MTS/IEEE OCEANS 2015 to present our work on direct monocular SLAM for underwater sequences, a collaboration with Paulo Drews-Jr (Universidade Federal do Rio Grande, Brazil) and Mario Campos (Universidade Federal de Minas Gerais, Brazil).

Alejo Concha, Paulo Drews-Jr, Mario Campos and Javier Civera

Real-Time Localization and Dense Mapping in Underwater Environments from a Monocular Sequence (draft). MTS/IEEE OCEANS, Genova, Italy, 2015.

April 2015. Our Special Issue on Cloud Robotics and Automation in the IEEE Transactions on Automation Science and Engineering is out! After a year and a half of hard work, I am pleased with the result and happy to have been involved in such an exciting topic.

January 2015. I joined the Editorial Board of the IEEE Transactions on Automation Science and Engineering as an Associate Editor for the next three years :)

July 2014. From July 28th to August 2nd I will be giving a week-long course on robotic vision at the Escuela de Ciencias Informáticas 2014 in Buenos Aires, Argentina. Thanks to the Computer Science Department at the University of Buenos Aires for the invitation!

June 2014. On June 30th, I am giving an invited talk on robotic vision at MUVS (Multi Unmanned Vehicle Systems) 2014, in Compiègne. The workshop looks extremely interesting: it is aimed at both industry and academia, and it addresses the vision, control and network challenges of unmanned vehicles. Can't wait to be there!

May 2014. Very happy with our paper accepted at RSS 2014! In short, we integrate Manhattan and piecewise-planar constraints into the variational approach to monocular mapping. Such constraints matter in man-made scenes, indoors and outdoors, where photometric constraints and standard regularization alone can lead to inaccurate results in low-texture areas. In a room-sized environment, our approach reduces the error by a factor of 5. More details in the paper and the video.

April 2014. Together with Wajahat Hussain and Luis Montano, I have been working on room layout estimation from audiovisual data. We got a paper accepted at AAAI 2014:

Wajahat Hussain, Javier Civera and Luis Montano

Grounding Acoustic Echoes in Single View Geometry Estimation (pdf). Twenty-Eighth AAAI Conference on Artificial Intelligence (AAAI-2014), Québec City, Canada, 2014. We are quite excited about the possibilities of this new line of research! Audio is a largely unexplored data modality, yet it is available on most portable devices today (smartphones, Kinect, laptops...).
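As a rough illustration of why echoes carry geometric information (a textbook time-of-flight relation, not the method of the AAAI paper): if a known signal is emitted and its reflection off a wall is recorded, the round-trip delay τ gives the wall distance d = cτ/2, where c is the speed of sound. The sketch below finds τ by brute-force cross-correlation. The 343 m/s speed of sound, the 48 kHz sample rate, the pseudo-random probe signal, the function names, and the assumption that the direct sound has already been removed from the recording are all illustrative choices.

```python
import random
from typing import Sequence

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees Celsius


def estimate_echo_delay(emitted: Sequence[float], recorded: Sequence[float],
                        max_lag: int) -> int:
    """Lag in samples at which `recorded` best matches `emitted`.

    Brute-force cross-correlation over lags 0..max_lag. Assumes the direct
    sound was already removed, so the strongest match is the wall echo.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(e * recorded[i + lag]
                    for i, e in enumerate(emitted)
                    if i + lag < len(recorded))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def wall_distance(delay_s: float, speed: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflector: the sound travels there and back."""
    return speed * delay_s / 2.0


if __name__ == "__main__":
    rate_hz = 48_000
    rng = random.Random(0)
    emitted = [rng.choice((-1.0, 1.0)) for _ in range(256)]  # pseudo-random probe
    true_lag = 480                                            # 10 ms at 48 kHz
    recorded = [0.0] * true_lag + [0.5 * s for s in emitted] + [0.0] * 600
    lag = estimate_echo_delay(emitted, recorded, max_lag=1000)
    print(lag, round(wall_distance(lag / rate_hz), 3))  # expected: 480 1.715
```

A pseudo-random probe is used because its autocorrelation has a single sharp peak, which makes the echo delay easy to pick out; real rooms add the direct path, multiple reflections and noise.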

February 2014. My paper with Alejo Concha on the use of superpixels in monocular SLAM was accepted at ICRA 2014 (UPDATE: and nominated for the Best Vision Paper Award!!). Find the paper here:

Alejo Concha and Javier Civera

Using Superpixels in Monocular SLAM (pdf draft) (video). IEEE International Conference on Robotics and Automation (ICRA), 2014. Code to be released soon!

November 2013. Our proposal for doing SLAM in the Cloud has been accepted for publication in Robotics and Autonomous Systems.

Luis Riazuelo, Javier Civera, J. M. M. Montiel

C2TAM: A Cloud Framework for Cooperative Tracking and Mapping (pdf draft) (video 1) (video 2). Robotics and Autonomous Systems, accepted for publication in 2013. Code available at https://sites.google.com/site/c2tamvisualslam/

October 2013. Following our Cloud Robotics Workshop at IROS 2013, I am co-organizing a special issue on Cloud Robotics and Automation in the IEEE Transactions on Automation Science and Engineering, with Markus Waibel (ETHZ), Matei Ciocarlie (Willow Garage), Alper Aydemir (NASA/JPL), Kostas Bekris (Rutgers University) and Sanjay Sarma (MIT), and with the invaluable help of IEEE T-ASE Editor-in-Chief Ken Goldberg. Find the Call for Papers here.

June 2013. I am co-organizing a workshop on Wearable Vision at ICCV 2013, together with my colleagues Ana Cristina Murillo, from my group at the University of Zaragoza, and Mohammad Moghimi and Serge Belongie, from the University of California, San Diego. I am quite excited to be involved in such a promising topic, and to have Kristen Grauman, Takeo Kanade, Peyman Milanfar, Jim Rehg and Bruce Thomas as invited speakers. I am sure it will be a great event!

April 2013. I am co-organizing a workshop on Cloud Robotics at IROS 2013, together with my RoboEarth colleague Markus Waibel (ETHZ), Alper Aydemir (KTH), Ken Goldberg (Berkeley) and Matei Ciocarlie (Willow Garage). Check the workshop website for updates. I hope to see you there if you attend IROS; it will be an excellent opportunity to learn about and discuss the great potential of Cloud Computing for robots!

November 2012. I am looking for a PhD student to work on SLAM with a wearable camera, starting January 2013. Please click here for more details on the position and how to apply.

June 2012. I will be at CVPR 2012 with the paper:

Alessandro Prest, Christian Leistner, Javier Civera, Cordelia Schmid, Vittorio Ferrari

Learning Object Class Detectors from Weakly Annotated Video (pdf). CVPR 2012. Thank you very much, Alessandro Prest, Christian Leistner, Cordelia Schmid and Vitto Ferrari, for the opportunity to work with you; I learned a lot from you all.

May 2012. I gave a SLAM course in the Mechatronics Department at Tallinn University of Technology. I really enjoyed my time there; thank you very much, Professor Mart Tamre and Dmitry Shvarts, for your kind invitation!

April 2012. A year went by so fast!! An extremely busy and exciting year, with lots of news but no time to post it here: I had an excellent time during my 4-month visit to the Calvin group at ETHZ. I became an associate professor at Universidad de Zaragoza, Spain. I coauthored an IJCV paper with Joan Solà, Teresa Vidal-Calleja and J. M. M. Montiel. My PhD thesis was published by Springer...

April 2011. Together with Ana Cristina Murillo, Kurt Konolige and Jana Kosecka, I am organizing the 1st IEEE Workshop on Challenges and Opportunities in Robot Perception, held jointly with ICCV 2011 in Barcelona. Please consider submitting a paper if you are working on any topic related to robotic perception. The deadline is July 1. For more information, visit our website: http://robots.unizar.es/corp

March 2011. I am at ETH Zürich on a research visit until July, working with Professor Vittorio Ferrari.

January 2011. My PhD thesis was accepted for publication in the series Springer Tracts in Advanced Robotics.

December 2010. I started co-advising the PhD thesis of Mr. Wajahat Hussain together with Prof. Luis Montano.

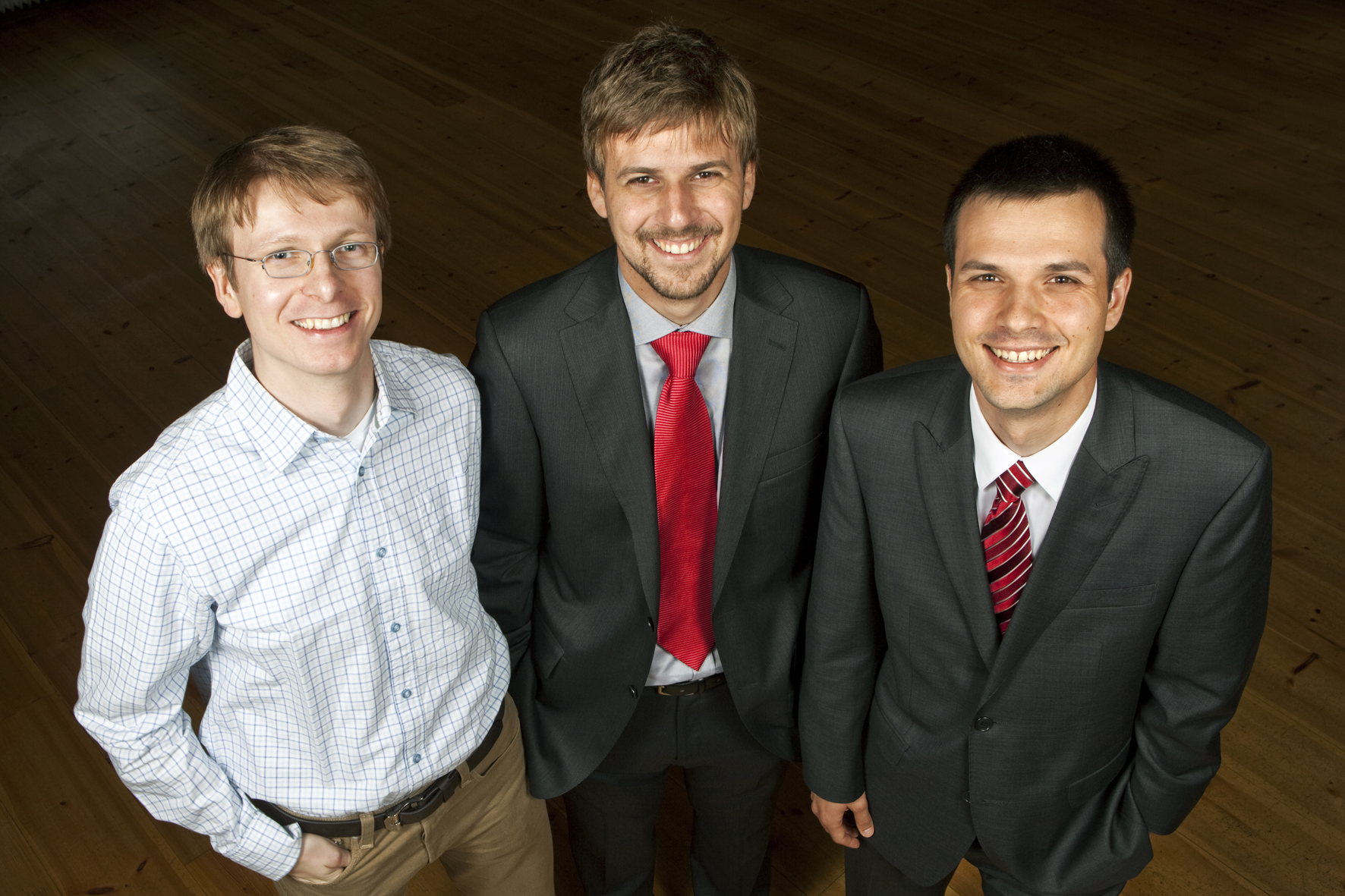

September 2010. I was proud to be a finalist for the Robotdalen Award, one of the most prestigious international awards for PhD theses in Robotics and Automation. In the picture I am with Uwe Mettin (left), now in Trondheim, and Matei Ciocarlie (right), the winner, now at Willow Garage.

Picture taken by the photographer Terése Andersson.

May 2010. I released some Matlab code from my PhD thesis (inverse depth and 1-point RANSAC) under the GPL license. Find it here. I also inaugurated a news section on my website :)
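For readers unfamiliar with inverse depth: in the common six-parameter coding, a point is stored as the optical center (x0, y0, z0) from which it was first seen, the azimuth θ and elevation φ of the viewing ray, and the inverse depth ρ along that ray, so that the Euclidean point is (x0, y0, z0) + (1/ρ) m(θ, φ), with m(θ, φ) = (cos φ sin θ, −sin φ, cos φ cos θ). Distant or low-parallax points simply have ρ close to 0. The Python sketch below illustrates that convention; it is not a port of the released Matlab code, whose axis conventions may differ, and the function name is hypothetical.

```python
import math
from typing import Tuple


def inverse_depth_to_xyz(x0: float, y0: float, z0: float,
                         theta: float, phi: float, rho: float) -> Tuple[float, float, float]:
    """Convert a six-parameter inverse depth point to Euclidean coordinates.

    (x0, y0, z0): camera optical center when the feature was first observed
    theta, phi:   azimuth and elevation of the viewing ray
    rho:          inverse of the distance along that ray, must be > 0
    """
    if rho <= 0.0:
        raise ValueError("rho must be positive; rho -> 0 is a point at infinity")
    # unit direction m(theta, phi) of the viewing ray
    mx = math.cos(phi) * math.sin(theta)
    my = -math.sin(phi)
    mz = math.cos(phi) * math.cos(theta)
    d = 1.0 / rho  # distance from the first optical center along the ray
    return (x0 + d * mx, y0 + d * my, z0 + d * mz)


if __name__ == "__main__":
    # a feature first seen from the origin, straight ahead, 4 m away (rho = 0.25)
    print(inverse_depth_to_xyz(0.0, 0.0, 0.0, 0.0, 0.0, 0.25))  # (0.0, 0.0, 4.0)
```

Inside a filter the state keeps ρ itself rather than the Euclidean point, which is what lets a point far away, with ρ near zero, carry a well-behaved uncertainty; the conversion above is only needed when a Euclidean map is wanted.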