News

- February 2017: Code available (see Downloads).

- November 2016: Paper and supplementary materials available (see Downloads).

- November 2016: Website launched.

Abstract

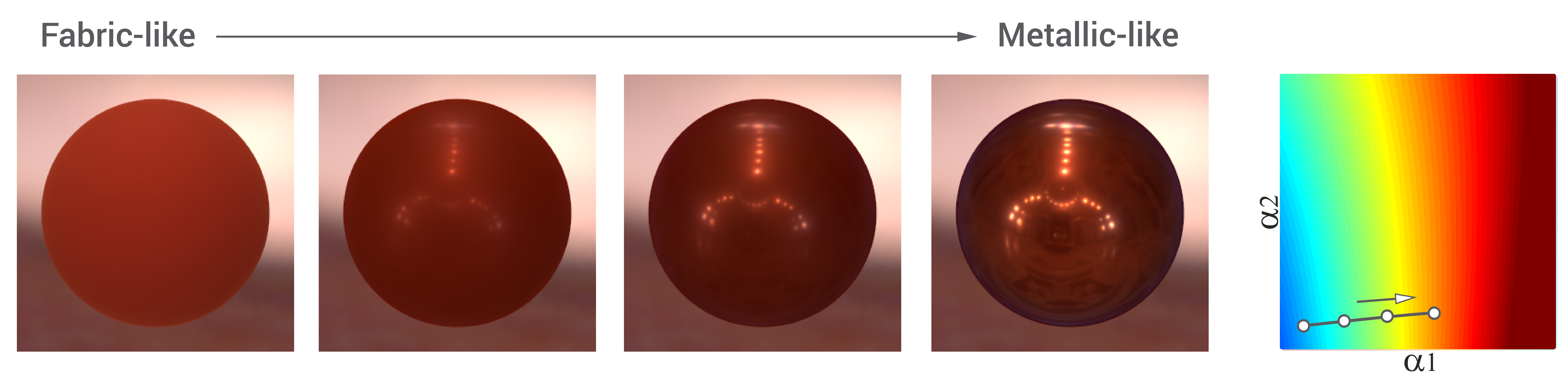

Many different techniques for measuring material appearance have been proposed in the last few years. These have produced large public datasets, which have been used for accurate, data-driven appearance modeling. However, although these datasets have allowed us to reach an unprecedented level of realism in visual appearance, editing the captured data remains a challenge. In this paper, we present an intuitive control space for predictable editing of captured BRDF data, which allows for artistic creation of plausible novel material appearances, bypassing the difficulty of acquiring novel samples. We first synthesize novel materials, extending the existing MERL dataset to 400 mathematically valid BRDFs. We then design a large-scale experiment, gathering 56,000 subjective ratings on the high-level perceptual attributes that best describe our extended dataset of materials. Using these ratings, we build and train networks of radial basis functions to act as functionals mapping the perceptual attributes to an underlying PCA-based representation of BRDFs. We show that our functionals are excellent predictors of the perceived attributes of appearance. Our control space enables many applications, including intuitive material editing of a wide range of visual properties, guidance for gamut mapping, analysis of the correlation between perceptual attributes, and novel appearance similarity metrics. Moreover, our methodology can be used to derive functionals applicable to classic analytic BRDF representations. We release our code and dataset publicly to support and encourage further research in this direction.
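The abstract summarizes the core pipeline: BRDFs are represented in a PCA basis, and networks of radial basis functions are trained on the collected ratings to act as functionals for the perceptual attributes. As an illustration only, the Python/NumPy sketch below treats a functional as a map from a BRDF (via its PCA coefficients) to a scalar attribute score. It assumes each BRDF is flattened into a vector, projects the vectors onto their first `k` principal components, and fits a Gaussian RBF network by regularized least squares. The names `pca_fit`, `pca_project`, and `RBFFunctional`, the Gaussian kernel, the width `sigma`, the ridge term `reg`, and the choice of one center per training sample are all hypothetical; the paper's actual network architecture, preprocessing, and training procedure may differ.

```python
import numpy as np


def pca_fit(X, k):
    """Return (mean, basis) for the top-k principal components of the rows of X."""
    mean = X.mean(axis=0)
    # SVD of the centered data: the rows of Vt are the principal directions.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]


def pca_project(X, mean, basis):
    """Coordinates of each row of X in the (orthonormal) PCA basis."""
    return (X - mean) @ basis.T


class RBFFunctional:
    """Hypothetical Gaussian RBF network mapping PCA coefficients to one scalar."""

    def __init__(self, sigma=1.0, reg=1e-6):
        self.sigma = sigma
        self.reg = reg  # small ridge term keeps the linear solve well conditioned

    def _design(self, Z):
        # Squared distances between every input row and every center.
        d2 = ((Z[:, None, :] - self.centers[None, :, :]) ** 2).sum(axis=-1)
        phi = np.exp(-d2 / (2.0 * self.sigma ** 2))
        # Append a constant column so the network also learns a bias term.
        return np.hstack([phi, np.ones((Z.shape[0], 1))])

    def fit(self, Z, y):
        self.centers = Z.copy()  # assumption: one center per training sample
        A = self._design(Z)
        # Regularized least squares for the output weights and bias.
        lhs = A.T @ A + self.reg * np.eye(A.shape[1])
        self.w = np.linalg.solve(lhs, A.T @ y)
        return self

    def predict(self, Z):
        return self._design(Z) @ self.w


if __name__ == "__main__":
    # Synthetic stand-ins: 40 "BRDFs" of 300 samples and one made-up rating each.
    rng = np.random.default_rng(0)
    X = rng.random((40, 300))
    y = X[:, :150].mean(axis=1)
    mean, basis = pca_fit(X, k=5)
    Z = pca_project(X, mean, basis)
    f = RBFFunctional(sigma=1.0).fit(Z, y)
    print("max training error:", np.max(np.abs(f.predict(Z) - y)))
```

Because the functional is an explicit function of the PCA coefficients, it can be re-evaluated cheaply after any change to those coefficients; with real data, `sigma`, `reg`, and `k` would need to be chosen by validation rather than fixed as above.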

Downloads

- Paper [PDF, 78.88 MB]

- Supplementary material [PDF, 119.66 MB]

- Slides [PPTX, 56.3 MB]

- Code and training dataset [RAR, 1.26 GB]

- Extended MERL dataset [RAR, 2.51 GB]: BRDFs generated as combinations of BRDFs from the MERL dataset.

The code and dataset provided are the property of Universidad de Zaragoza and are free for non-commercial use.
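The extended MERL dataset consists of BRDFs generated as combinations of MERL BRDFs. This page does not say how those combinations are formed, so the following is only a hypothetical sketch, not part of the released code: it assumes simple convex (linear) blending of two BRDFs tabulated on the same sampling grid, and the names `blend_brdfs`, `blend_series`, and `is_nonnegative` are illustrative. Convex blending is a natural baseline for producing valid BRDFs: a convex combination of two non-negative, reciprocal BRDFs is itself non-negative and reciprocal, and because directional albedo is linear in the BRDF, the blend's albedo stays at or below 1 whenever both inputs' albedos do.

```python
import numpy as np


def blend_brdfs(brdf_a, brdf_b, t):
    """Convex blend (1 - t) * a + t * b of two BRDFs tabulated on the same grid.

    Hypothetical helper: assumes both inputs are NumPy arrays of reflectance
    values sampled at identical angle pairs and color channels.
    """
    if brdf_a.shape != brdf_b.shape:
        raise ValueError("BRDFs must be sampled on the same grid")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1] for a convex combination")
    return (1.0 - t) * brdf_a + t * brdf_b


def blend_series(brdf_a, brdf_b, steps):
    """Evenly spaced convex blends from brdf_a (t = 0) to brdf_b (t = 1)."""
    return [blend_brdfs(brdf_a, brdf_b, t) for t in np.linspace(0.0, 1.0, steps)]


def is_nonnegative(brdf, tol=0.0):
    """Basic validity check: reflectance values must not be negative."""
    return bool(np.all(brdf >= -tol))
```

A blend series like this only shows how new samples could be placed between existing materials; checks for energy conservation or perceptual plausibility would still be needed before adding them to a dataset.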


Related

- 2017: Attribute-preserving gamut mapping of measured BRDFs

- 2017: Intuitive Editing of Visual Appearance from Real-World Datasets

Acknowledgements

We thank the members of the Graphics & Imaging Lab for fruitful insights and discussion, and in particular Elena Garces, Adrian Jarabo, Carlos Aliaga, Alba Samanes, and Cristina Tirado. We would also like to thank Oleksandr Sotnychenko. This research has been funded by the European Research Council (Consolidator Grant, project Chameleon, ref. 682080), as well as the Spanish Ministry of Economy and Competitiveness (project LIGHTSLICE, ref. TIN2013-41857-P). Belen Masia and Ana Serrano would like to acknowledge the support of the Max Planck Center for Visual Computing and Communication. Ana Serrano was additionally supported by an FPI grant from the Spanish Ministry of Economy and Competitiveness.