Understanding Error-Related Potentials

| (a) | (b) |

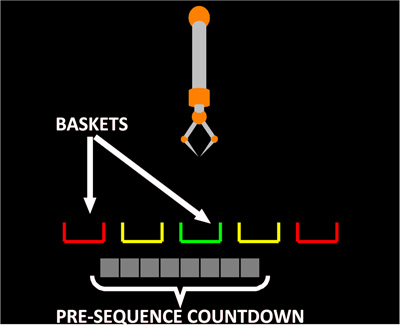

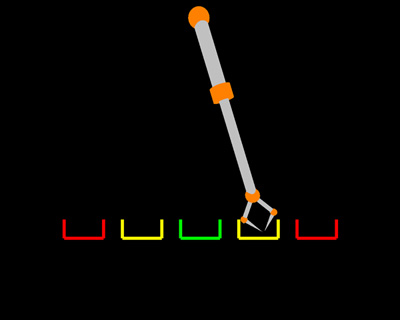

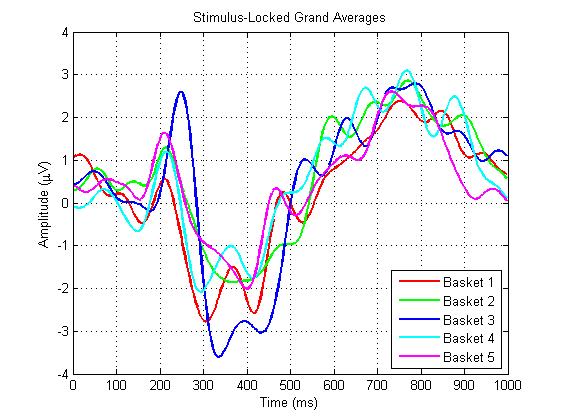

In the experiment, a subject observed a virtual robot on a screen performing a reaching task while the EEG was recorded. The robot had two degrees of freedom (see (a)): a revolute joint at the base of the arm that rotated the full arm, and a prismatic joint that extended the arm. Five different actions moved the robot gripper to each of five predefined areas (marked as baskets). The subject was instructed to judge the robot motion (see (b)) as follows: (i) a motion towards the central basket is a correct operation, (ii) a motion towards the baskets immediately to the left or right of the central one is a small operation error, and (iii) a motion towards the outermost baskets is a large operation error. This protocol therefore covers error vs. no-error conditions, different levels of operation error, and different error locations. Using a simulated environment isolates the study from problems such as robot synchronization and time delays, ensures repeatability among subjects, speeds up the experimentation phase, and facilitates the evaluation and characterization of the ERP activity.

Two subjects took part in the experiments. The recording session consisted of several sequences of actions observed by the subject. Each sequence started with a five-second countdown that prepared the subject for the operation and was composed of 10 movements. Each movement started with the robot at the initial position for one second, after which the arm switched to one of the five final positions. After another second, the arm returned to the initial position and the process was repeated. The instantaneous motion between the initial and final positions eliminates the effect of continuous operation and provides a clear trigger of the ERP.
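The timing and labelling rules of one sequence can be sketched in a few lines of Python. This is only an illustrative sketch, not the software used in the experiments: the basket indices (0 to 4, left to right), the label names, the function names, and the uniform random choice of the final position are all assumptions, since the text does not state how actions were drawn.

```python
import random

# Assumed indexing: baskets 0..4 from left to right, 2 is the central basket.
BASKETS = [0, 1, 2, 3, 4]
CENTER = 2


def label_action(basket):
    """Return (error_level, error_side) for a motion towards `basket`."""
    distance = abs(basket - CENTER)
    if distance == 0:
        return ("correct", "none")
    side = "left" if basket < CENTER else "right"
    level = "small_error" if distance == 1 else "large_error"
    return (level, side)


def make_sequence(n_movements=10, countdown_s=5.0, hold_s=1.0, rng=None):
    """Build one sequence as a list of (onset_s, basket, level, side).

    onset_s is the instant the arm jumps to its final position,
    i.e. the event that would serve as the ERP trigger.
    """
    rng = rng or random.Random()
    events = []
    t = countdown_s                    # sequence begins after the countdown
    for _ in range(n_movements):
        t += hold_s                    # arm rests at the initial position
        basket = rng.choice(BASKETS)   # assumption: uniform random action
        level, side = label_action(basket)
        events.append((t, basket, level, side))
        t += hold_s                    # arm holds the final position, then returns
    return events


if __name__ == "__main__":
    for onset, basket, level, side in make_sequence(rng=random.Random(0)):
        print(f"t={onset:5.1f}s  basket={basket}  {level:12s} {side}")
```

With the durations given in the text (five-second countdown, one second at each position), the triggers fall every two seconds starting six seconds into the sequence, and each trigger carries both the error level and the error side needed to separate the conditions afterwards.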

Brain-Controlled Wheelchair

We developed a new non-invasive brain-actuated wheelchair that relies on a P300 neurophysiological protocol and automated navigation. In operation, the user faces a screen displaying a real-time virtual reconstruction of the scenario and concentrates on the location in space to reach. A visual stimulation process elicits the neurological phenomenon, and the electroencephalogram (EEG) signal processing detects the target location. This location is transferred to the autonomous navigation system, which drives the wheelchair to the desired location while avoiding collisions with obstacles in the environment detected by the laser scanner. This concept gives the user the flexibility to use the device in unknown and evolving scenarios. The prototype was validated with five healthy participants in three consecutive steps: screening (an analysis of three different groups of visual interface designs), virtual-environment driving, and driving sessions with the wheelchair. We performed the following evaluation studies: 1) a technical evaluation of the device and all its functionalities; 2) a study of the users' behavior; and 3) a variability study. Overall, all the participants were able to operate the device successfully and with relative ease, showing good adaptation as well as high robustness and low variability of the system.

Media coverage

New Scientist article

Engadget

Heraldo de Aragón (in Spanish)