D-TOP: Deep Reinforcement Learning with Tuneable Optimization for Autonomous Navigation

Abstract

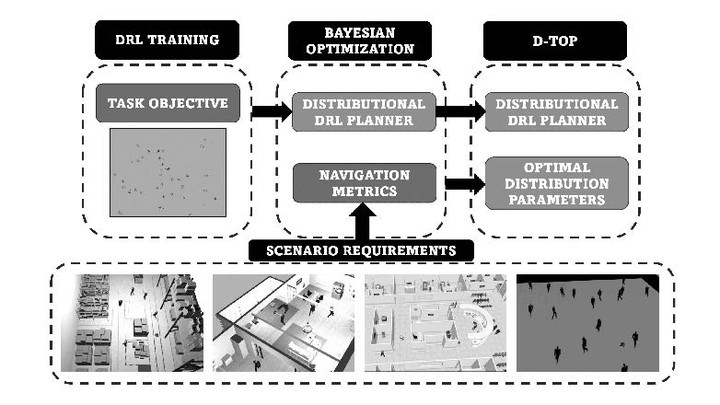

The field of autonomous navigation has seen significant advancements in recent years, with deep reinforcement learning (DRL) algorithms demonstrating impressive capabilities over traditional methods in various settings. DRL algorithms plan their motion according to what they have experienced during training, trying to optimize the task encoded in the reward. However, DRL methods cannot change the behavior of autonomous robots based on the environment, which is crucial, for example, to ensure safe and understandable interactions around humans or efficient trajectories in open spaces. These behaviors are captured by navigation metrics that are directly or indirectly related to the task. The only way to change them is to retrain the agent with a different reward function, which requires long computation times as well as complex reward shaping and reward engineering. In this article, we propose a novel autonomous navigation approach that uses an attention-based network and distributional DRL to estimate the probability distribution of the values of the robot's possible velocities. We then propose a framework that modifies the agent's action selection through adjustable parameters that depend on this probability distribution. This process makes it possible to tailor the agent's behavior to specific navigation metrics. We present experiments that validate our method, comparing it with other state-of-the-art planners and showing that it can find different optimal behaviors depending on the needs of each scenario.
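To illustrate how a tunable parameter can act on a value distribution rather than on a single expected value, here is a minimal sketch of risk-sensitive action selection. It is not the authors' rule, which the abstract does not specify. It assumes that each candidate velocity command has its return distribution represented by a list of quantile estimates (as in quantile-regression distributional RL) and scores each action as mean plus `risk` times standard deviation. The function name `select_action`, the parameter `risk`, and the example numbers are hypothetical.

```python
import statistics
from typing import Sequence


def select_action(quantiles: Sequence[Sequence[float]], risk: float = 0.0) -> int:
    """Return the index of the action maximizing mean + risk * std of its return quantiles.

    Hypothetical rule for illustration: risk < 0 penalizes uncertain actions
    (risk-averse), risk = 0 is the usual expected-value choice, and risk > 0
    favors actions with a wide spread of possible returns (risk-seeking).
    """
    scores = []
    for q in quantiles:
        mu = statistics.fmean(q)       # expected return of this action
        sigma = statistics.pstdev(q)   # spread of the estimated return distribution
        scores.append(mu + risk * sigma)
    # max over indices; ties resolve to the lowest index
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical quantile estimates for two candidate velocity commands.
candidate_quantiles = [
    [1.0, 1.0, 1.0, 1.0],   # cautious velocity: certain, modest return (mean 1.0)
    [-1.0, 0.5, 2.0, 3.0],  # aggressive velocity: higher mean (1.125), wider spread
]

print(select_action(candidate_quantiles, risk=0.0))   # risk-neutral → 1
print(select_action(candidate_quantiles, risk=-1.0))  # risk-averse → 0
```

The same trained distribution yields different behaviors simply by changing `risk` at decision time, with no retraining; other distribution-dependent rules (for example, conditional value at risk over the lower quantiles) could be swapped in the same way.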