Efficient tool segmentation for endoscopic videos in the wild

Abstract

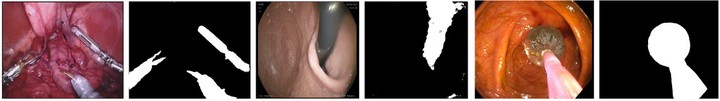

In recent years, deep learning methods have become the most effective approach for tool segmentation in endoscopic images, achieving state-of-the-art results on the available public benchmarks. However, these methods present challenges that hinder their direct deployment in real-world scenarios. This work explores how to solve two of the most common ones: real-time and memory constraints, and false positives in frames with no tools. To address the first, we show how to adapt an efficient general-purpose semantic segmentation model. We then study a common consequence of training only on images that contain at least one tool: when frames of endoscopic procedures without tools are processed, the model produces many false positives. To solve this, we propose adding an extra classification head that performs binary frame classification to identify frames with no tools present. Finally, we present a thorough comparison of this approach with the current state of the art on different benchmarks, including recordings from real medical practice, demonstrating similar accuracy with much lower computational requirements.
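As a rough illustration of how a frame-level classification output could suppress false positives at inference time, the sketch below gates a per-pixel mask with a binary tool-presence score. The abstract does not state how the head's output is combined with the segmentation, so the gating rule, the function name `gate_mask`, and both thresholds are assumptions made for illustration only.

```python
import math
from typing import List

Mask = List[List[int]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gate_mask(
    pixel_probs: List[List[float]],
    frame_logit: float,
    pixel_thr: float = 0.5,   # assumed per-pixel threshold
    frame_thr: float = 0.5,   # assumed frame-level threshold
) -> Mask:
    """Return a binary tool mask, emptied when the frame is classified as tool-free.

    pixel_probs: per-pixel tool probabilities from the segmentation output.
    frame_logit: raw score from the (hypothetical) binary classification head.
    """
    frame_has_tool = sigmoid(frame_logit) >= frame_thr
    if not frame_has_tool:
        # Frame classified as containing no tool: discard all pixel predictions.
        return [[0 for _ in row] for row in pixel_probs]
    return [[1 if p >= pixel_thr else 0 for p in row] for row in pixel_probs]


if __name__ == "__main__":
    probs = [[0.9, 0.2], [0.6, 0.1]]
    print(gate_mask(probs, frame_logit=3.0))   # classifier says a tool is present
    print(gate_mask(probs, frame_logit=-3.0))  # classifier says no tool
```

With this rule, spurious high-probability pixels in a tool-free frame are dropped whenever the frame-level score falls below the threshold, at the cost of losing the whole mask if the classifier wrongly rejects a frame.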