(presented at SMI 2019)

Combining Voxel and Normal Predictions for Multi-View 3D Sketching

Abstract

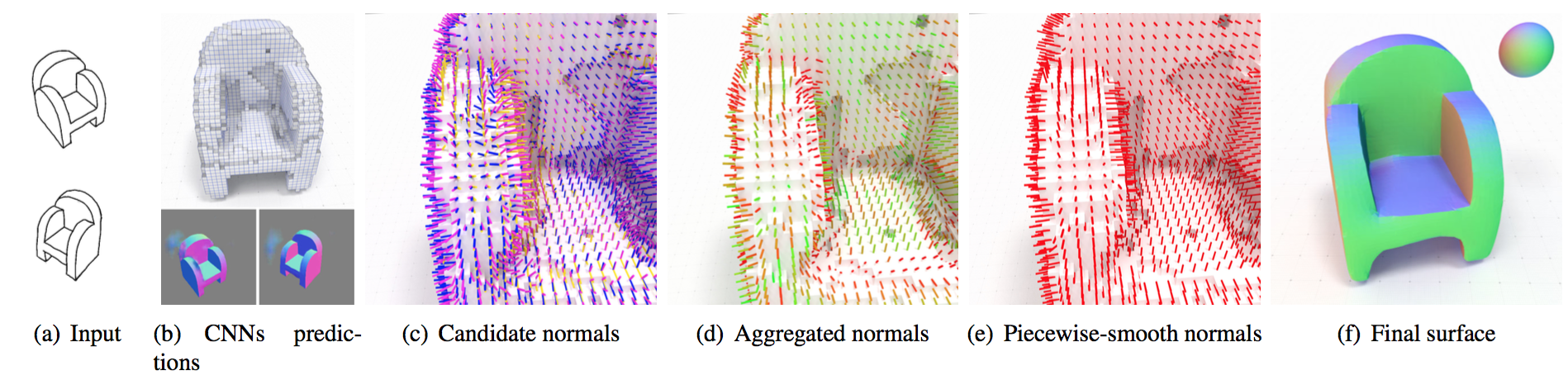

Recent works on data-driven sketch-based modeling use either voxel grids or normal/depth maps as geometric representations compatible with convolutional neural networks. While voxel grids can represent complete objects, including parts not visible in the sketches, their memory consumption restricts them to low-resolution predictions. In contrast, a single normal or depth map can capture fine details, but multiple maps from different viewpoints need to be predicted and fused to produce a closed surface. We propose to combine these two representations to address their respective shortcomings in the context of a multi-view sketch-based modeling system. Our method predicts a voxel grid common to all the input sketches, along with one normal map per sketch. We then use the voxel grid as a support for normal map fusion by optimizing its extracted surface such that it is consistent with the re-projected normals, while being as piecewise-smooth as possible overall. We compare our method with a recent voxel prediction system, demonstrating improved recovery of sharp features over a variety of man-made objects.
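As a rough illustration of the idea of using the voxel grid as a support for normal map fusion, here is a minimal NumPy sketch. It is not the paper's implementation (see the repository for that) and rests on strong simplifying assumptions: views are orthographic and axis-aligned, normal maps are already expressed in world coordinates at the grid resolution, and the optimization with its piecewise-smooth term is replaced by a plain average of the normals re-projected onto each visible surface voxel. The function names `surface_voxels` and `fuse_normals` are hypothetical.

```python
import numpy as np


def surface_voxels(occ):
    """Indices of occupied voxels that have at least one empty 6-neighbour."""
    padded = np.pad(occ, 1, constant_values=False)
    core = padded[1:-1, 1:-1, 1:-1]
    interior = np.ones_like(core, dtype=bool)
    for axis in range(3):
        for shift in (-1, 1):
            # Wrap-around from np.roll only touches the padding, which is cropped.
            interior &= np.roll(padded, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    return np.argwhere(core & ~interior)


def fuse_normals(occ, views):
    """Average per-view normals on the visible surface voxels.

    occ   : (N, N, N) boolean occupancy grid.
    views : list of (axis, normal_map) pairs; ASSUMPTION: each view looks
            along +axis orthographically and normal_map is an (N, N, 3)
            array of world-space normals indexed by the two other axes.
    """
    surf = surface_voxels(occ)
    acc = np.zeros((len(surf), 3))
    for axis, nmap in views:
        # Depth of the first occupied voxel along the viewing axis, per pixel.
        first = np.argmax(occ, axis=axis)
        # Pixel coordinates of each surface voxel in this view.
        pix = np.delete(surf, axis, axis=1)
        visible = first[pix[:, 0], pix[:, 1]] == surf[:, axis]
        acc[visible] += nmap[pix[visible, 0], pix[visible, 1]]
    norm = np.linalg.norm(acc, axis=1, keepdims=True)
    # Voxels seen by no view keep a zero normal.
    fused = np.divide(acc, norm, out=np.zeros_like(acc), where=norm > 0)
    return surf, fused


if __name__ == "__main__":
    # Toy example: a 2x2x2 cube seen from two axis-aligned views.
    occ = np.zeros((4, 4, 4), dtype=bool)
    occ[1:3, 1:3, 1:3] = True
    nx = np.zeros((4, 4, 3)); nx[..., 0] = -1.0  # view along +x
    ny = np.zeros((4, 4, 3)); ny[..., 1] = -1.0  # view along +y
    surf, fused = fuse_normals(occ, [(0, nx), (1, ny)])
    print(len(surf), "surface voxels")
```

In this toy version, surface voxels that no view can see simply receive a zero normal. A piecewise-smooth regularization such as the one described in the abstract would be the natural place to propagate normals to those regions while preserving sharp edges.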

Video

Downloads and code

- GitLab repo: contains the code and network model to reproduce results from the paper.

BibTeX

@article{delanoy2019combining,
  title     = {Combining voxel and normal predictions for multi-view 3D sketching},
  author    = {Delanoy, Johanna and Coeurjolly, David and Lachaud, Jacques-Olivier and Bousseau, Adrien},
  journal   = {Computers \& Graphics},
  volume    = {82},
  pages     = {65--72},
  year      = {2019},
  publisher = {Pergamon},
  doi       = {10.1016/j.cag.2019.05.024},
  url       = {https://www.sciencedirect.com/science/article/pii/S0097849319300858}
}