Abstract

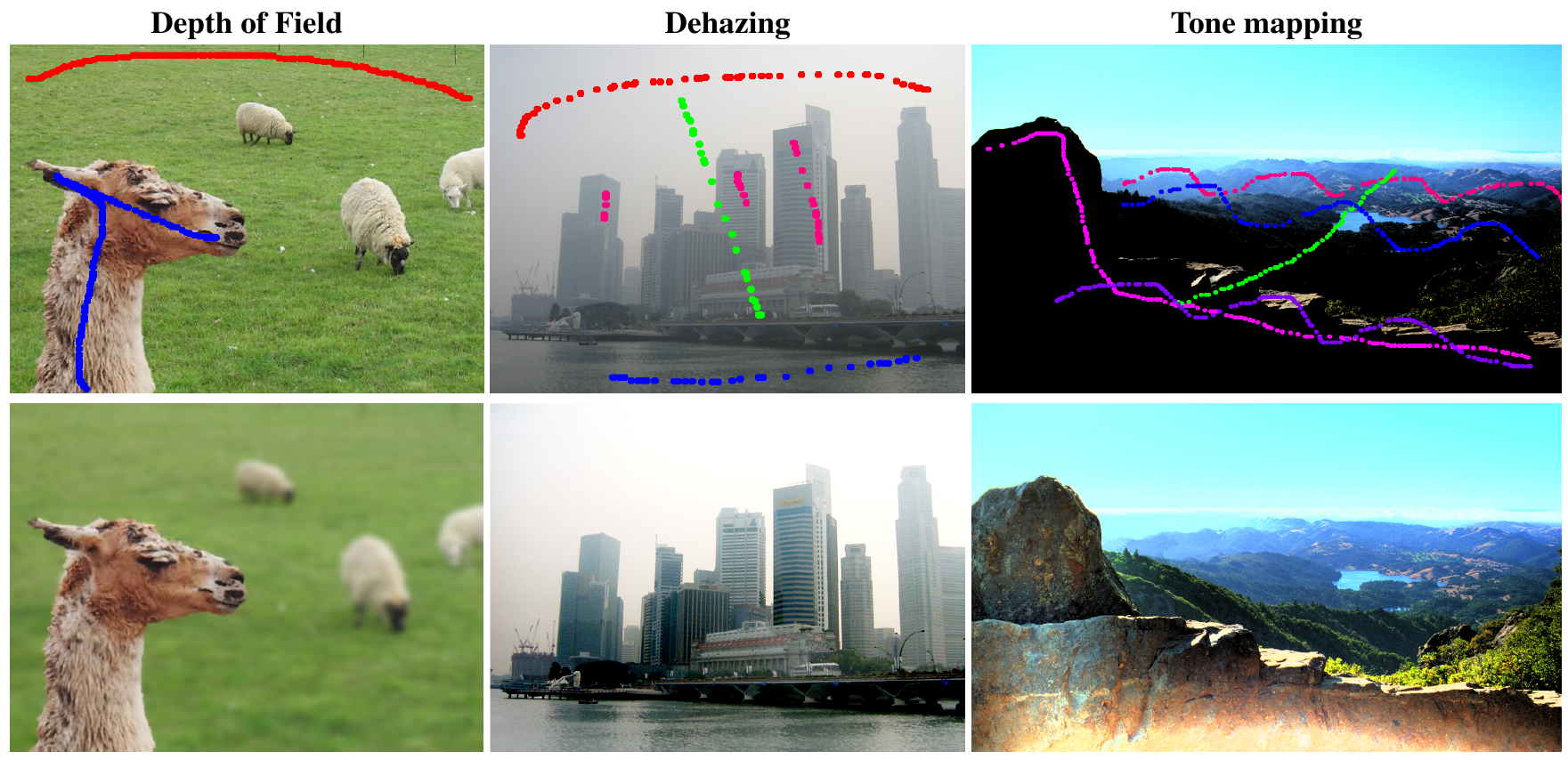

Many image editing methods have been proposed over the past decades, with impressive results. The most sophisticated of them, however, require additional per-pixel information: dehazing requires a transmittance value per pixel, and depth-of-field blurring requires a depth or disparity value per pixel. These per-pixel values are obtained either through elaborate heuristics or through additional control over the capture hardware, which is very often tailored to the specific editing application. In contrast, we propose a generic editing paradigm that can serve as the basis for several different applications. This paradigm generates both the required per-pixel values and the resulting edit at interactive rates, from minimal user input that can be iteratively refined. Our key insight for obtaining per-pixel values at such speed is to cluster pixels into superpixels; but instead of assigning a constant value to each superpixel (which causes accuracy problems), we model the pixel values within each superpixel with a mathematical expression, in our case an order-two multinomial. This leads to a linear least-squares system, which yields specific per-pixel values quickly. We illustrate the approach with three applications: depth-of-field blurring (from depth values), dehazing (from transmittance values), and tone mapping (from local brightness and contrast values). It proves both interactive and accurate in all three. We also evaluate our technique on a common dataset, where it compares favorably.
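To make the central idea concrete, the following is a minimal sketch of fitting one order-two expression per superpixel by linear least squares. It is not the released source code. It assumes the expression is a quadratic in the pixel coordinates (x, y), that a superpixel label map is already available, and that each superpixel is fitted independently from sampled values; the function names (`quadratic_basis`, `fit_superpixel_polynomials`, `evaluate_polynomials`) are hypothetical, and the actual system may couple superpixels and propagate user input differently.

```python
import numpy as np


def quadratic_basis(x, y):
    """Order-two monomials in (x, y): 1, x, y, x^2, x*y, y^2 (assumed form)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.stack([np.ones_like(x), x, y, x * x, x * y, y * y], axis=-1)


def fit_superpixel_polynomials(labels, sample_mask, sample_values):
    """Fit six coefficients per superpixel from the sampled pixels inside it.

    labels        : (H, W) integer superpixel ids
    sample_mask   : (H, W) bool, True where a value is known
    sample_values : (H, W) float, known values (read only where mask is True)
    Returns a dict mapping superpixel id -> array of 6 coefficients.
    Superpixels without any sample are skipped in this simplified sketch.
    """
    ys, xs = np.nonzero(sample_mask)
    coeffs = {}
    for label in np.unique(labels):
        inside = labels[ys, xs] == label
        if not inside.any():
            continue
        A = quadratic_basis(xs[inside], ys[inside])   # (n_samples, 6)
        b = sample_values[ys[inside], xs[inside]]     # (n_samples,)
        # Linear least squares; lstsq returns the minimum-norm solution
        # when a superpixel has fewer than six independent samples.
        coeffs[int(label)] = np.linalg.lstsq(A, b, rcond=None)[0]
    return coeffs


def evaluate_polynomials(labels, coeffs, fill=np.nan):
    """Evaluate each superpixel's quadratic at every pixel it contains."""
    height, width = labels.shape
    ys, xs = np.mgrid[0:height, 0:width]
    basis = quadratic_basis(xs, ys)                   # (H, W, 6)
    out = np.full(labels.shape, fill, dtype=float)
    for label, c in coeffs.items():
        region = labels == label
        out[region] = basis[region] @ c
    return out
```

Compared with storing a single constant per superpixel, this keeps the number of unknowns small (six per superpixel) while still letting the recovered value vary smoothly from pixel to pixel inside each region.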

Files

Source code

The source code for this paper has been released to the public domain.

Acknowledgements

This work is supported by the National Science Fund of China (61772209), the Science and Technology Planning Project of Guangdong Province (2016A050502050), the European Research Council (ERC) under the EU’s Horizon 2020 research and innovation programme (project CHAMELEON, grant No 682080) and the Spanish Ministry of Economy and Competitiveness (project TIN2016-78753-P).